Remote IoT batch job processing lets organizations run heavy computation over device data in the cloud instead of on local hardware. As more IoT data lands in cloud storage, knowing how to manage and process large datasets remotely on platforms like AWS becomes a practical necessity. This article explains how remote IoT batch jobs work and offers examples and best practices for processing data efficiently on AWS.

Whether you're a developer, data scientist, or IT professional, this guide will equip you with the knowledge and tools needed to execute remote IoT batch jobs seamlessly. By the end of this article, you'll have a comprehensive understanding of how to design, deploy, and optimize batch processing workflows in a remote environment using AWS services.

The sections below start with the fundamentals of remote IoT batch jobs, then move through setup, design, implementation, scaling, and monitoring on AWS.

Understanding Remote IoT Batch Jobs

A remote IoT batch job runs computational tasks over IoT data on distributed, scalable infrastructure, typically in the cloud. This approach allows organizations to process large volumes of data without being limited by on-premises resources. In this section, we'll explore the key concepts behind remote IoT batch jobs and their significance in modern data processing.

What Are Batch Jobs in IoT?

Batch jobs in IoT involve processing large datasets in batches rather than handling individual data points in real time. This method is particularly useful for tasks that require significant computational power and time, such as data analysis, machine learning model training, and data transformation. By batching data, organizations can optimize resource utilization and reduce costs.

- Batch jobs are ideal for processing historical data.

- They enable the execution of complex algorithms on large datasets.

- Batch processing can improve consistency, because a complete dataset is processed in one run with the same code and settings.
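
To make the idea of batching concrete, here is a minimal sketch that groups individual sensor readings into hourly batches per device and reduces each batch to summary statistics. The reading format (device ID, epoch seconds, value) and the function names are assumptions made for illustration, not part of any AWS API.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import mean


def hourly_batches(readings):
    """Group (device_id, epoch_seconds, value) readings by device and UTC hour."""
    batches = defaultdict(list)
    for device_id, ts, value in readings:
        hour = datetime.fromtimestamp(ts, tz=timezone.utc).strftime("%Y-%m-%dT%H")
        batches[(device_id, hour)].append(value)
    return batches


def summarize(batches):
    """Reduce every batch to count, min, max, and mean."""
    return {
        key: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for key, values in batches.items()
    }


# Hypothetical readings: two devices, spread over two consecutive hours.
readings = [
    ("sensor-a", 1700000000, 21.5),
    ("sensor-a", 1700000600, 22.5),
    ("sensor-b", 1700000000, 18.0),
    ("sensor-a", 1700003600, 23.0),
]
summary = summarize(hourly_batches(readings))
```

The same grouping step is what a real batch job would apply to a much larger historical dataset, one stored file or partition at a time.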

Why Remote Processing Matters

Remote processing removes the need to own and operate physical infrastructure, allowing organizations to scale their operations dynamically based on demand. With cloud platforms like AWS, businesses can access powerful computing resources on demand and pay only for the capacity their IoT batch jobs actually use.

According to a report by Gartner, "Cloud-based solutions are becoming the default choice for enterprises due to their flexibility and scalability." This trend underscores the growing importance of remote IoT batch jobs in modern data management strategies.

Getting Started with AWS for Remote IoT Batch Jobs

AWS provides a suite of services that support remote IoT batch job processing, from compute resources to storage. In this section, we'll walk you through the AWS components that make remote IoT batch processing possible.

AWS Batch: The Core Service for Batch Processing

AWS Batch is a fully managed service that simplifies the execution of batch computing workloads in the cloud. It automatically provisions compute resources and optimizes the distribution of batch jobs across available resources, ensuring efficient processing and cost management.

- AWS Batch can run jobs on EC2 On-Demand Instances, EC2 Spot Instances, and AWS Fargate for flexible resource allocation.

- It integrates seamlessly with other AWS services, such as S3 for data storage and Lambda for serverless computing.

- The service provides detailed monitoring and reporting capabilities to help you track job progress and performance.

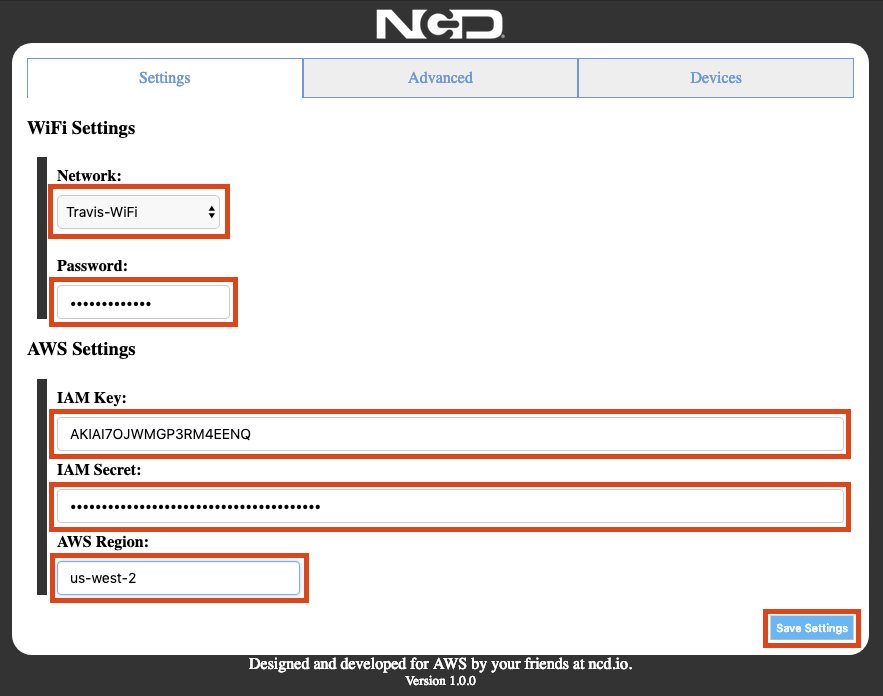

Setting Up Your AWS Environment

Before you can start executing remote IoT batch jobs on AWS, you'll need to set up your environment. This involves creating an AWS account, configuring IAM roles and permissions, and setting up the necessary services. Below are the key steps to get started:

- Create an AWS account and log in to the AWS Management Console.

- Set up IAM roles with appropriate permissions for batch job execution.

- Configure AWS Batch by creating compute environments and job queues.

- Integrate S3 buckets for data storage and retrieval.
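
The compute environment and job queue steps above can be sketched as request payloads for boto3's `create_compute_environment` and `create_job_queue` calls. This is an illustrative sketch only: the helper functions, resource names, subnet and security group IDs, and the instance role are all placeholders, and the code builds the requests without sending them.

```python
def compute_environment_request(name, subnets, security_group_ids,
                                instance_role, max_vcpus=64):
    """Build keyword arguments for boto3's batch.create_compute_environment."""
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS Batch provisions and scales the instances
        "state": "ENABLED",
        "computeResources": {
            "type": "EC2",
            "allocationStrategy": "BEST_FIT_PROGRESSIVE",
            "minvCpus": 0,  # scale to zero when no jobs are queued
            "maxvCpus": max_vcpus,
            "instanceTypes": ["optimal"],
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
            "instanceRole": instance_role,
        },
    }


def job_queue_request(name, compute_environment, priority=1):
    """Build keyword arguments for boto3's batch.create_job_queue."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment},
        ],
    }


# Placeholder identifiers; substitute values from your own account.
env_request = compute_environment_request(
    name="iot-batch-env",
    subnets=["subnet-EXAMPLE"],
    security_group_ids=["sg-EXAMPLE"],
    instance_role="ecsInstanceRole",
)
queue_request = job_queue_request(
    "iot-batch-queue", env_request["computeEnvironmentName"]
)

# With credentials configured, the requests could be sent like this:
#   import boto3
#   batch = boto3.client("batch")
#   batch.create_compute_environment(**env_request)
#   batch.create_job_queue(**queue_request)  # once the environment is VALID
```

Keeping the payloads as plain dictionaries makes them easy to review or store in version control before anything is created in the account.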

Designing Efficient Remote IoT Batch Jobs

Designing efficient remote IoT batch jobs requires careful planning and consideration of various factors, including data volume, processing requirements, and resource allocation. In this section, we'll explore best practices for designing batch jobs that maximize performance and minimize costs.

Optimizing Data Flow

Efficient data flow is critical for successful remote IoT batch job processing. By optimizing how data is ingested, processed, and stored, you can significantly improve the performance of your batch jobs. Consider the following strategies:

- Use S3 for scalable and cost-effective data storage.

- Implement data compression techniques to reduce storage and transfer costs.

- Partition data to enable parallel processing and faster job execution.
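
As a rough illustration of the compression and partitioning strategies above, the sketch below writes records as gzip-compressed JSON Lines and derives a date- and device-partitioned object key. The key layout, prefix, and function names are assumptions chosen for the example; any consistent partitioning scheme would serve the same purpose.

```python
import gzip
import json
from datetime import datetime, timezone


def partition_key(prefix, device_id, ts):
    """Build an object key partitioned by UTC date and device."""
    day = datetime.fromtimestamp(ts, tz=timezone.utc)
    return f"{prefix}/date={day:%Y-%m-%d}/device={device_id}/{ts}.jsonl.gz"


def encode_records(records):
    """Serialize records as JSON Lines and gzip the result."""
    lines = "\n".join(json.dumps(record, sort_keys=True) for record in records)
    return gzip.compress(lines.encode("utf-8"))


def decode_records(blob):
    """Inverse of encode_records, as a batch job would use when reading."""
    text = gzip.decompress(blob).decode("utf-8")
    return [json.loads(line) for line in text.splitlines() if line]


# Hypothetical readings from one device.
records = [
    {"device": "sensor-a", "ts": 1700000000, "temp_c": 21.5},
    {"device": "sensor-a", "ts": 1700000600, "temp_c": 22.5},
]
key = partition_key("raw", "sensor-a", records[0]["ts"])
blob = encode_records(records)

# Uploading would be one call with boto3 (the bucket name is a placeholder):
#   boto3.client("s3").put_object(Bucket="my-iot-bucket", Key=key, Body=blob)
```

Because each key carries its date and device, a job can list only the prefixes it needs, and separate jobs can work on separate partitions in parallel.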

Choosing the Right Compute Resources

Selecting the appropriate compute resources is essential for ensuring optimal performance. AWS offers a variety of instance types, each designed for specific workloads. When choosing compute resources for your remote IoT batch jobs, consider the following:

- Use On-Demand Instances for workloads that must finish on a predictable schedule, and Spot Instances for cost-sensitive tasks that can tolerate interruption.

- Select instance types based on the computational requirements of your batch jobs.

- Monitor resource utilization and adjust instance configurations as needed.

Implementing Remote IoT Batch Jobs on AWS

Implementing remote IoT batch jobs on AWS involves several steps, from defining job definitions to monitoring job execution. In this section, we'll guide you through the process of implementing batch jobs using AWS services.

Defining Job Definitions

A job definition specifies the parameters and settings for a batch job, including the container image, resource requirements, and environment variables. To define a job definition in AWS Batch:

- Create a Docker image containing the necessary code and dependencies for your batch job.

- Push the Docker image to Amazon Elastic Container Registry (ECR).

- Define a job definition in the AWS Management Console or using the AWS CLI.
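
For the last step, a job definition can also be registered programmatically. The sketch below builds the arguments for boto3's `register_job_definition`; the helper function, job name, image URI, command, and environment variable are hypothetical values used only to show the shape of the request.

```python
def job_definition_request(name, image, vcpus=1, memory_mib=2048, env=None):
    """Build keyword arguments for boto3's batch.register_job_definition."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            # Hypothetical entry point inside the container image.
            "command": ["python", "process.py"],
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},  # MiB
            ],
            "environment": [
                {"name": key, "value": value}
                for key, value in sorted((env or {}).items())
            ],
        },
        "retryStrategy": {"attempts": 2},
        "timeout": {"attemptDurationSeconds": 3600},
    }


# Placeholder account ID, region, and repository name.
definition = job_definition_request(
    name="iot-aggregate",
    image="123456789012.dkr.ecr.us-east-1.amazonaws.com/iot-batch:latest",
    vcpus=2,
    memory_mib=4096,
    env={"INPUT_PREFIX": "raw/"},
)

# Registering it would look like:
#   boto3.client("batch").register_job_definition(**definition)
```

Note that the resource values are passed as strings, which is what the AWS Batch API expects for `resourceRequirements`.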

Executing Batch Jobs

Once your job definitions are set up, you can submit batch jobs for execution. AWS Batch automatically provisions compute resources and distributes jobs across available instances. To execute a batch job:

- Submit the job to the appropriate job queue.

- Monitor job progress using the AWS Management Console or CloudWatch.

- Retrieve job results from the location your job writes to, such as an S3 prefix.
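
The submit-and-monitor steps above can be sketched as a small helper around boto3's `submit_job` and `describe_jobs` calls. The helper name, polling interval, and the idea of passing the client in as an argument are choices made for this example; a production workflow might react to job state change events instead of polling.

```python
import time

TERMINAL_STATES = {"SUCCEEDED", "FAILED"}


def submit_and_wait(batch, job_name, queue, definition, env=None,
                    poll_seconds=15, sleep=time.sleep):
    """Submit one job and poll until it reaches a terminal state.

    `batch` is expected to behave like boto3.client("batch").
    Returns a (job_id, final_status) tuple.
    """
    overrides = {
        "environment": [
            {"name": key, "value": value} for key, value in (env or {}).items()
        ]
    }
    response = batch.submit_job(
        jobName=job_name,
        jobQueue=queue,
        jobDefinition=definition,
        containerOverrides=overrides,
    )
    job_id = response["jobId"]
    while True:
        job = batch.describe_jobs(jobs=[job_id])["jobs"][0]
        if job["status"] in TERMINAL_STATES:
            return job_id, job["status"]
        sleep(poll_seconds)


# Example call, assuming the queue and job definition already exist:
#   import boto3
#   job_id, status = submit_and_wait(
#       boto3.client("batch"), "nightly-aggregate",
#       "iot-batch-queue", "iot-aggregate",
#       env={"INPUT_PREFIX": "raw/date=2023-11-14/"},
#   )
```

Passing the client as a parameter keeps the helper easy to test with a stand-in object, without touching a real AWS account.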

Scaling Remote IoT Batch Jobs

Scaling remote IoT batch jobs is essential for handling increasing data volumes and computational demands. AWS provides several tools and features to help you scale your batch processing workflows effectively. In this section, we'll explore strategies for scaling remote IoT batch jobs on AWS.

Using Auto Scaling

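In a managed compute environment, AWS Batch scales capacity for you: it adds instances when jobs are waiting and removes them as queues drain, staying within the minimum and maximum vCPU limits you set. If you run an unmanaged compute environment, for example on your own EC2 Auto Scaling groups, you configure the scaling policies yourself. Consider the following best practices, the first two of which apply to Auto Scaling groups you manage yourself: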

- Set up target tracking scaling policies to maintain optimal performance.

- Use scheduled scaling to accommodate predictable workload patterns.

- Monitor scaling activities to identify and address potential bottlenecks.
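
Adding compute only shortens a run when the work is split into independent pieces. One common way to do that in AWS Batch is an array job, where each child job receives its index in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The sketch below shows one possible way to map that index to a slice of input files; the helper names and object keys are hypothetical.

```python
import os


def shard_for_child(items, array_size, index):
    """Return the items one array child should process (round-robin split)."""
    if not 0 <= index < array_size:
        raise ValueError("index must be in [0, array_size)")
    return items[index::array_size]


def current_shard(items, array_size, environ=os.environ):
    """Pick this child's shard from the index AWS Batch sets in the environment."""
    index = int(environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    return shard_for_child(items, array_size, index)


# Hypothetical object keys; in practice these might come from listing an S3 prefix.
keys = [f"raw/part-{n:04d}.jsonl.gz" for n in range(10)]
array_size = 4
shards = [shard_for_child(keys, array_size, i) for i in range(array_size)]

# The parent job would be submitted with the same size, for example:
#   batch.submit_job(jobName="backfill", jobQueue="iot-batch-queue",
#                    jobDefinition="iot-aggregate",
#                    arrayProperties={"size": array_size})
```

Every child must see the same ordered list of inputs for the shards to be disjoint, so it is safer to sort the listing or read it from a manifest file.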

Optimizing Costs

Scaling remote IoT batch jobs can lead to increased costs if not managed properly. To optimize costs, consider the following strategies:

- Use Spot Instances for non-critical, interruption-tolerant workloads; AWS advertises savings of up to 90% compared with On-Demand pricing.

- Implement cost allocation tags to track and analyze spending.

- Regularly review and adjust resource configurations to ensure efficiency.
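
A quick back-of-the-envelope calculation can help decide whether Spot capacity is worth it for a given job. The sketch below compares On-Demand and Spot cost for the same amount of work, with an allowance for work repeated after interruptions. All prices and the overhead figure are made-up placeholders, not actual AWS rates.

```python
def spot_comparison(vcpu_hours, on_demand_price, spot_price, retry_overhead=0.0):
    """Compare On-Demand and Spot cost for the same workload.

    retry_overhead is the fraction of extra work caused by interrupted
    jobs being rerun (0.10 means 10% of the work is done twice).
    Returns (on_demand_cost, spot_cost, savings_fraction).
    """
    on_demand_cost = vcpu_hours * on_demand_price
    spot_cost = vcpu_hours * (1 + retry_overhead) * spot_price
    savings = 1 - spot_cost / on_demand_cost
    return on_demand_cost, spot_cost, savings


# Placeholder numbers: 1,000 vCPU-hours, hypothetical per-vCPU-hour prices.
on_demand_cost, spot_cost, savings = spot_comparison(
    vcpu_hours=1000,
    on_demand_price=0.05,
    spot_price=0.015,
    retry_overhead=0.10,
)
print(f"On-Demand: ${on_demand_cost:.2f}  Spot: ${spot_cost:.2f}  "
      f"Savings: {savings:.0%}")
```

With these placeholder inputs the Spot run is still much cheaper even after rerunning a tenth of the work, but the result depends entirely on the real prices and interruption rate for your instance types and region.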

Monitoring and Managing Remote IoT Batch Jobs

Effective monitoring and management are crucial for ensuring the success of remote IoT batch jobs. AWS provides several tools and services to help you monitor job performance, troubleshoot issues, and optimize workflows. In this section, we'll explore the key tools and techniques for managing remote IoT batch jobs.

Using CloudWatch for Monitoring

Amazon CloudWatch is a powerful monitoring service that provides insights into the performance and health of your AWS resources. By setting up CloudWatch metrics and alarms, you can proactively identify and address issues with your batch jobs. Consider the following:

- Monitor job execution times and resource utilization to identify bottlenecks.

- Set up alarms to notify you of potential issues or anomalies.

- Use CloudWatch Logs to analyze job output and debug issues.
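
One way to track job execution times is to compute them from the `startedAt` and `stoppedAt` timestamps (in epoch milliseconds) that `describe_jobs` returns, and then publish them as a custom CloudWatch metric. The sketch below assumes that approach; the metric name, namespace, and dimension are hypothetical choices.

```python
def job_durations(jobs):
    """Map jobId to run time in seconds for jobs that started and stopped."""
    durations = {}
    for job in jobs:
        if "startedAt" in job and "stoppedAt" in job:
            durations[job["jobId"]] = (job["stoppedAt"] - job["startedAt"]) / 1000
    return durations


def slow_jobs(jobs, threshold_seconds):
    """Job IDs whose run time exceeded the threshold, sorted for stable output."""
    return sorted(
        job_id
        for job_id, seconds in job_durations(jobs).items()
        if seconds > threshold_seconds
    )


def duration_metric_data(jobs, queue_name):
    """Build the MetricData list for cloudwatch.put_metric_data."""
    return [
        {
            "MetricName": "JobRunSeconds",  # hypothetical custom metric name
            "Dimensions": [{"Name": "JobQueue", "Value": queue_name}],
            "Value": seconds,
            "Unit": "Seconds",
        }
        for seconds in job_durations(jobs).values()
    ]


# Sample entries shaped like the "jobs" list in a describe_jobs response.
jobs = [
    {"jobId": "a", "status": "SUCCEEDED",
     "startedAt": 1700000000000, "stoppedAt": 1700000090000},
    {"jobId": "b", "status": "FAILED",
     "startedAt": 1700000000000, "stoppedAt": 1700000600000},
    {"jobId": "c", "status": "RUNNABLE"},  # not started yet, so it is skipped
]
metric_data = duration_metric_data(jobs, "iot-batch-queue")

# Publishing would be a single call (the namespace is a placeholder):
#   boto3.client("cloudwatch").put_metric_data(
#       Namespace="Custom/IoTBatch", MetricData=metric_data)
```

Once the metric exists, a CloudWatch alarm on it can flag runs that take noticeably longer than usual.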

Managing Job Queues

Job queues let you organize and prioritize batch jobs. AWS Batch allows you to create multiple job queues with different priority values; when queues share a compute environment, the scheduler evaluates the queue with the higher priority value first, so critical jobs get capacity before routine ones. To manage job queues:

- Create separate queues for different types of jobs or workloads.

- Assign priority levels to queues based on business needs.

- Monitor queue performance and adjust settings as needed.
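
As an illustration of separate queues with different priorities, the sketch below builds `create_job_queue` requests for three queues that share one compute environment and lists them in the order the scheduler would favor. The queue names, priority values, and environment name are invented for the example.

```python
def queue_requests(compute_environment, priorities):
    """Build one batch.create_job_queue request per named priority level."""
    return [
        {
            "jobQueueName": f"iot-{name}",
            "state": "ENABLED",
            "priority": priority,  # higher values are evaluated first
            "computeEnvironmentOrder": [
                {"order": 1, "computeEnvironment": compute_environment},
            ],
        }
        for name, priority in priorities.items()
    ]


def evaluation_order(requests):
    """Queue names from highest to lowest priority."""
    ranked = sorted(requests, key=lambda request: request["priority"], reverse=True)
    return [request["jobQueueName"] for request in ranked]


# Hypothetical workload classes sharing a single compute environment.
queue_specs = queue_requests(
    "iot-batch-env",
    {"routine": 10, "alerts": 100, "backfill": 1},
)
order = evaluation_order(queue_specs)

# Creating the queues would be one call per request:
#   batch = boto3.client("batch")
#   for spec in queue_specs:
#       batch.create_job_queue(**spec)
```

Leaving gaps between priority values makes it easier to slot in a new queue later without renumbering the existing ones.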

Best Practices for Remote IoT Batch Job Processing

Adopting best practices is key to achieving success with remote IoT batch job processing on AWS. In this section, we'll summarize the best practices for designing, implementing, and managing remote IoT batch jobs effectively.

- Plan your batch jobs carefully, considering factors such as data volume, processing requirements, and resource allocation.

- Optimize data flow by using efficient storage solutions and data compression techniques.

- Select appropriate compute resources based on the computational demands of your batch jobs.

- Monitor job performance and resource utilization to identify and address potential issues.

- Regularly review and adjust your workflows to ensure optimal performance and cost efficiency.

Conclusion

Remote IoT batch job processing is a powerful strategy for managing large-scale data operations in today's digital landscape. By leveraging AWS services, organizations can execute batch jobs efficiently and cost-effectively, enabling them to harness the full potential of IoT data. This article has provided a comprehensive overview of remote IoT batch jobs, offering practical examples and best practices to help you succeed.

We encourage you to apply the insights and strategies discussed in this article to your own workflows. By doing so, you'll be well equipped to tackle the challenges of remote IoT batch job processing and achieve your business objectives.
